Basics of Linear Algebra for Machine Learning

Linear Algebra for Machine Learning

Sargur N. Srihari

srihari@cedar.buffalo.edu

Importance of Linear Algebra in ML

Topics in Linear Algebra for ML

- Why do we need Linear Algebra?

- From scalars to tensors

- Flow of tensors in ML

- Matrix operations: determinant, inverse

- Eigenvalues and eigenvectors

- Singular Value Decomposition

- Principal components analysis

What is linear algebra?

- Linear algebra is the branch of mathematics concerning linear equations such as $a_1 x_1 + \cdots + a_n x_n = b$

– In vector notation we say $\mathbf{a}^T \mathbf{x} = b$

– Called a linear transformation of $\mathbf{x}$

- Linear algebra is fundamental to geometry, for defining objects such as lines, planes, rotations

Linear equation $a_1 x_1 + \cdots + a_n x_n = b$ defines a plane in $(x_1, \ldots, x_n)$ space

Straight lines define common solutions to equations

Why do we need to know it?

- Linear Algebra is used throughout engineering

– Because it is based on continuous math rather than discrete math

- Computer scientists have little experience with it

- Essential for understanding ML algorithms

– E.g., we convert input vectors $(x_1, \ldots, x_n)$ into outputs by a series of linear transformations

- Here we discuss:

– Concepts of linear algebra needed for ML

– Omit other aspects of linear algebra

Machine Learning Srihari

Linear Algebra Topics

– Scalars, Vectors, Matrices and Tensors

– Multiplying Matrices and Vectors

– Identity and Inverse Matrices

– Linear Dependence and Span

– Norms

– Special kinds of matrices and vectors

– Eigendecomposition

– Singular value decomposition

– The Moore-Penrose pseudoinverse

– The trace operator

– The determinant

– Ex: principal components analysis


Scalar

- Single number

– In contrast to other objects in linear algebra, which are usually arrays of numbers

- Represented in lower-case italic x

– They can be real-valued or be integers

- E.g., let $x \in \mathbb{R}$ be the slope of the line

– Defining a real-valued scalar

- E.g., let $n \in \mathbb{N}$ be the number of units

– Defining a natural number scalar

Vector

- An array of numbers arranged in order

- Each number is identified by an index

- Written in lower-case bold such as x

– Its elements are in italic lower case, subscripted

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$$

- If each element is in $\mathbb{R}$ then x is in $\mathbb{R}^n$

- We can think of vectors as points in space

– Each element gives the coordinate along an axis


Matrices

- 2-D array of numbers

– So each element is identified by two indices

- Denoted by bold typeface A

– Elements indicated by name in italic but not bold

- $A_{1,1}$ is the top left entry and $A_{m,n}$ is the bottom right entry

- We can identify all the numbers in row i by writing : for the horizontal coordinate, and all the numbers in column j by writing : for the vertical coordinate

– E.g., $A_{i,:}$ is the i-th row of A and $A_{:,j}$ is the j-th column of A

- If A has shape of height m and width n with real values then $\mathbf{A} \in \mathbb{R}^{m \times n}$

$$\mathbf{A} = \begin{bmatrix} A_{1,1} & A_{1,2} \\ A_{2,1} & A_{2,2} \end{bmatrix}$$
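The row and column notation above maps directly onto NumPy slicing. The following is a minimal sketch, assuming a small made-up 2 x 3 matrix; note that NumPy indices start at 0, whereas the slide notation starts at 1.

```python
import numpy as np

# A has height m = 2 and width n = 3, so A is in R^{2x3}.
# The entries are arbitrary illustration values.
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])

m, n = A.shape                   # (2, 3)
top_left = A[0, 0]               # A_{1,1} in the 1-based slide notation
bottom_right = A[m - 1, n - 1]   # A_{m,n}

row_1 = A[0, :]                  # A_{1,:}, the first row
col_2 = A[:, 1]                  # A_{:,2}, the second column

print("Shape:", A.shape)
print("First row:", row_1)
print("Second column:", col_2)
```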


Tensor

- Sometimes we need an array with more than two axes

– E.g., an RGB color image has three axes

- A tensor is an array of numbers arranged on a regular grid with variable number of axes

– See figure next

- Denote a tensor with this bold typeface: A

- Element (i,j,k) of tensor denoted by $A_{i,j,k}$


Numpy library in Python for tensors

– Zero-dimensional tensor

    import numpy as np
    x = np.array(100)
    print("Array:", x)
    print("Dimension:", x.ndim)

- Output

    Array: 100
    Dimension: 0

– One-dimensional tensor

    import numpy as np
    x = np.array([1,5,2,7,11,24,25,12])
    print("Array:", x)
    print("Dimension:", x.ndim)

- Output

    Array: [ 1  5  2  7 11 24 25 12]
    Dimension: 1

– Two-dimensional tensor

    import numpy as np
    x = np.array(
        [
            [1,5,2,7,11,24,25,12],
            [1,2,3,4,5,6,7,8]
        ]
    )
    print("Array:", x)
    print("Dimension:", x.ndim)

- Output

    Array: [[ 1  5  2  7 11 24 25 12]
     [ 1  2  3  4  5  6  7  8]]
    Dimension: 2
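The same pattern extends to three axes, which is the RGB image case mentioned on the Tensor slide. This is a minimal sketch: the tiny 2 x 3 "image" and its pixel values are invented for illustration, and the (height, width, channel) axis order is an assumption, not something the slides specify.

```python
import numpy as np

# A tiny "image": height 2, width 3, and 3 color channels (R, G, B).
# Pixel values are made up; axis order (height, width, channel) is assumed.
image = np.zeros((2, 3, 3), dtype=np.uint8)
image[0, 0] = [255, 0, 0]    # top-left pixel is pure red
image[1, 2] = [0, 0, 255]    # bottom-right pixel is pure blue

print("Dimension:", image.ndim)
print("Shape:", image.shape)
print("Element (i,j,k) = (0,0,0):", image[0, 0, 0])
```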


Transpose of a Matrix

- An important operation on matrices

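- The transpose of a matrix A is denoted as $\mathbf{A}^T$

- Defined as $(\mathbf{A}^T)_{i,j} = A_{j,i}$

– The mirror image across a diagonal line

– Called the main diagonal, running down to the right starting from upper left corner

$$\mathbf{A} = \begin{bmatrix} A_{1,1} & A_{1,2} & A_{1,3} \\ A_{2,1} & A_{2,2} & A_{2,3} \\ A_{3,1} & A_{3,2} & A_{3,3} \end{bmatrix} \Rightarrow \mathbf{A}^T = \begin{bmatrix} A_{1,1} & A_{2,1} & A_{3,1} \\ A_{1,2} & A_{2,2} & A_{3,2} \\ A_{1,3} & A_{2,3} & A_{3,3} \end{bmatrix}$$

$$\mathbf{A} = \begin{bmatrix} A_{1,1} & A_{1,2} \\ A_{2,1} & A_{2,2} \\ A_{3,1} & A_{3,2} \end{bmatrix} \Rightarrow \mathbf{A}^T = \begin{bmatrix} A_{1,1} & A_{2,1} & A_{3,1} \\ A_{1,2} & A_{2,2} & A_{3,2} \end{bmatrix}$$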
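As a quick check of the definition, the sketch below transposes a 3 x 2 matrix with NumPy's `.T` attribute and verifies $(\mathbf{A}^T)_{i,j} = A_{j,i}$ entry by entry. The matrix values are arbitrary.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4],
              [5, 6]])      # shape (3, 2)

At = A.T                    # shape (2, 3)

# Check the definition (A^T)_{i,j} = A_{j,i} entry by entry.
for i in range(At.shape[0]):
    for j in range(At.shape[1]):
        assert At[i, j] == A[j, i]

print(At)
```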

Vectors as special case of matrix

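- Vectors are matrices with a single column

- Often written in-line using transpose: $\mathbf{x} = [x_1, \ldots, x_n]^T$

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} \Rightarrow \mathbf{x}^T = [x_1, x_2, \ldots, x_n]$$

- A scalar is a matrix with one element, so it is its own transpose: $a = a^T$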


Matrix Addition

- We can add matrices to each other if they have the same shape, by adding corresponding elements

– If A and B have the same shape (height m, width n):

$$\mathbf{C} = \mathbf{A} + \mathbf{B} \Rightarrow C_{i,j} = A_{i,j} + B_{i,j}$$

- A scalar can be added to a matrix, and a matrix can be multiplied by a scalar

$$\mathbf{D} = a\mathbf{B} + c \Rightarrow D_{i,j} = a B_{i,j} + c$$

- Less conventional notation used in ML:

– Vector added to matrix

$$\mathbf{C} = \mathbf{A} + \mathbf{b} \Rightarrow C_{i,j} = A_{i,j} + b_j$$

– Called broadcasting since vector b is added to each row of A
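The three forms of addition above can be sketched in NumPy as follows. The matrices and the scalars a = 2, c = 1 are arbitrary illustration values, and the name `E` is used here for the broadcast result only to avoid reusing `C`.

```python
import numpy as np

A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
B = np.array([[10.0, 20.0, 30.0],
              [40.0, 50.0, 60.0]])
b = np.array([100.0, 200.0, 300.0])   # one entry per column of A

C = A + B            # C_{i,j} = A_{i,j} + B_{i,j}
D = 2.0 * B + 1.0    # D_{i,j} = a * B_{i,j} + c with a = 2, c = 1
E = A + b            # broadcasting: E_{i,j} = A_{i,j} + b_j

print(C)
print(D)
print(E)
```

NumPy broadcasts `b` across the rows of `A` because the length of `b` matches the width of `A`; a vector of any other length would raise an error rather than being added silently.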

Multiplying Matrices

- For the product C = AB to be defined, A has to have the same number of columns as the number of rows of B

– If A is of shape m x n and B is of shape n x p then the matrix product C is of shape m x p

– Note that the standard product of two matrices is not just the product of the individual elements

– Such a product does exist and is called the element-wise product or the Hadamard product $\mathbf{A} \odot \mathbf{B}$
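The difference between the two products shows up in the shapes. In this minimal sketch (arbitrary values), `@` is NumPy's matrix product and `*` is the element-wise (Hadamard) product, which requires both operands to have the same shape.

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])        # shape (2, 3): m = 2, n = 3
B = np.array([[1, 0],
              [0, 1],
              [1, 1]])           # shape (3, 2): n = 3, p = 2

C = A @ B                        # matrix product, shape (m, p) = (2, 2)
H = A * A                        # Hadamard product of A with itself, shape (2, 3)

print("C shape:", C.shape)
print(C)
print("H shape:", H.shape)
print(H)
```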


Multiplying Vectors

- Dot product between two vectors x and y of the same dimensionality is the matrix product $\mathbf{x}^T \mathbf{y}$

- We can think of the matrix product C = AB as computing $C_{i,j}$ as the dot product of row i of A and column j of B
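To make the row-times-column view concrete, the sketch below builds C one entry at a time from dot products and compares it with NumPy's built-in matrix product. The explicit loops are for illustration only; in practice `A @ B` is the idiomatic form.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = np.array([4.0, 5.0, 6.0])
dot_xy = x @ y                   # x^T y = 1*4 + 2*5 + 3*6 = 32

A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[5.0, 6.0],
              [7.0, 8.0]])

# Build C entry by entry: C_{i,j} = (row i of A) . (column j of B).
C = np.empty((A.shape[0], B.shape[1]))
for i in range(A.shape[0]):
    for j in range(B.shape[1]):
        C[i, j] = A[i, :] @ B[:, j]

print(dot_xy)
print(C)
```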

Matrix Product Properties

- Distributivity over addition: A(B+C) = AB + AC

- Associativity: A(BC) = (AB)C

- Not commutative: AB = BA is not always true

- Dot product between vectors is commutative: $\mathbf{x}^T \mathbf{y} = \mathbf{y}^T \mathbf{x}$

- Transpose of a matrix product has a simple form: $(\mathbf{A}\mathbf{B})^T = \mathbf{B}^T \mathbf{A}^T$
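These properties can be spot-checked numerically. The sketch below uses small integer matrices (arbitrary values) so that the comparisons are exact; a single example illustrates the properties but does not prove them.

```python
import numpy as np

A = np.array([[1, 2], [3, 4]])
B = np.array([[0, 1], [1, 0]])
C = np.array([[2, 0], [0, 3]])
x = np.array([1, 2])
y = np.array([3, 4])

distributive = np.array_equal(A @ (B + C), A @ B + A @ C)
associative = np.array_equal(A @ (B @ C), (A @ B) @ C)
commutes = np.array_equal(A @ B, B @ A)          # False for this pair
dot_commutes = (x @ y) == (y @ x)
transpose_rule = np.array_equal((A @ B).T, B.T @ A.T)

print(distributive, associative, commutes, dot_commutes, transpose_rule)
```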

Linear Transformation

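- $\mathbf{A}\mathbf{x} = \mathbf{b}$

– where $\mathbf{A} \in \mathbb{R}^{n \times n}$ and $\mathbf{b} \in \mathbb{R}^{n}$

– More explicitly (n equations in n unknowns)

$$\begin{aligned}
A_{1,1} x_1 + A_{1,2} x_2 + \cdots + A_{1,n} x_n &= b_1 \\
A_{2,1} x_1 + A_{2,2} x_2 + \cdots + A_{2,n} x_n &= b_2 \\
&\;\;\vdots \\
A_{n,1} x_1 + A_{n,2} x_2 + \cdots + A_{n,n} x_n &= b_n
\end{aligned}$$

- Sometimes we wish to solve for the unknowns $\mathbf{x} = \{x_1, \ldots, x_n\}$ when A and b provide constraints

$$\mathbf{A} = \begin{bmatrix} A_{1,1} & \cdots & A_{1,n} \\ \vdots & \ddots & \vdots \\ A_{n,1} & \cdots & A_{n,n} \end{bmatrix}, \quad \mathbf{x} = \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix}, \quad \mathbf{b} = \begin{bmatrix} b_1 \\ \vdots \\ b_n \end{bmatrix}$$

with shapes n x n, n x 1 and n x 1 respectively

- Can view A as a linear transformation of vector x to vector b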
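For a small concrete case, the system can be solved numerically. This is a minimal sketch with a made-up 2 x 2 system; it assumes A is square and invertible, which is what `np.linalg.solve` requires.

```python
import numpy as np

# Two equations in two unknowns (values chosen for illustration):
#   2*x1 + 1*x2 = 5
#   1*x1 + 3*x2 = 10
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([5.0, 10.0])

x = np.linalg.solve(A, b)     # solves A x = b without forming the inverse explicitly

print("x =", x)
print("A @ x =", A @ x)       # applying the transformation A to x recovers b
```

Solving by hand gives x1 = 1 and x2 = 3, so the computed `x` should agree with that up to floating-point rounding.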


Machine learning, explained

Why It Matters

This pervasive and powerful form of artificial intelligence is changing every industry. Here’s what you need to know about the potential and limitations of machine learning and how it’s being used.

Machine learning is behind chatbots and predictive text, language translation apps, the shows Netflix suggests to you, and how your social media feeds are presented. It powers autonomous vehicles and machines that can diagnose medical conditions based on images.

When companies today deploy artificial intelligence programs, they are most likely using machine learning — so much so that the terms are often used interchangeably, and sometimes ambiguously. Machine learning is a subfield of artificial intelligence that gives computers the ability to learn without explicitly being programmed.

“In just the last five or 10 years, machine learning has become a critical way, arguably the most important way, most parts of AI are done,” said MIT Sloan professor Thomas W. Malone, the founding director of the MIT Center for Collective Intelligence. “So that’s why some people use the terms AI and machine learning almost as synonymous … most of the current advances in AI have involved machine learning.”

With the growing ubiquity of machine learning, everyone in business is likely to encounter it and will need some working knowledge about this field. A 2020 Deloitte survey found that 67% of companies are using machine learning, and 97% are using or planning to use it in the next year.

From manufacturing to retail and banking to bakeries, even legacy companies are using machine learning to unlock new value or boost efficiency. “Machine learning is changing, or will change, every industry, and leaders need to understand the basic principles, the potential, and the limitations,” said MIT computer science professor Aleksander Madry, director of the MIT Center for Deployable Machine Learning.

While not everyone needs to know the technical details, they should understand what the technology does and what it can and cannot do, Madry added. “I don’t think anyone can afford not to be aware of what’s happening.”

That includes being aware of the social, societal, and ethical implications of machine learning. “It’s important to engage and begin to understand these tools, and then think about how you’re going to use them well. We have to use these [tools] for the good of everybody,” said Dr. Joan LaRovere, MBA ’16, a pediatric cardiac intensive care physician and co-founder of the nonprofit The Virtue Foundation. “AI has so much potential to do good, and we need to really keep that in our lenses as we’re thinking about this. How do we use this to do good and better the world?”

What is machine learning?

Machine learning is a subfield of artificial intelligence, which is broadly defined as the capability of a machine to imitate intelligent human behavior. Artificial intelligence systems are used to perform complex tasks in a way that is similar to how humans solve problems.

The goal of AI is to create computer models that exhibit “intelligent behaviors” like humans, according to Boris Katz, a principal research scientist and head of the InfoLab Group at CSAIL. This means machines that can recognize a visual scene, understand a text written in natural language, or perform an action in the physical world.

Machine learning is one way to use AI. It was defined in the 1950s by AI pioneer Arthur Samuel as “the field of study that gives computers the ability to learn without explicitly being programmed.”

The definition holds true, according to Mikey Shulman, a lecturer at MIT Sloan and head of machine learning at Kensho, which specializes in artificial intelligence for the finance and U.S. intelligence communities. He compared the traditional way of programming computers, or “software 1.0,” to baking, where a recipe calls for precise amounts of ingredients and tells the baker to mix for an exact amount of time. Traditional programming similarly requires creating detailed instructions for the computer to follow.

But in some cases, writing a program for the machine to follow is time-consuming or impossible, such as training a computer to recognize pictures of different people. While humans can do this task easily, it’s difficult to tell a computer how to do it. Machine learning takes the approach of letting computers learn to program themselves through experience.

Machine learning starts with data — numbers, photos, or text, like bank transactions, pictures of people or even bakery items, repair records, time series data from sensors, or sales reports. The data is gathered and prepared to be used as training data, or the information the machine learning model will be trained on. The more data, the better the program.

From there, programmers choose a machine learning model to use, supply the data, and let the computer model train itself to find patterns or make predictions. Over time the human programmer can also tweak the model, including changing its parameters, to help push it toward more accurate results. (Research scientist Janelle Shane’s website AI Weirdness is an entertaining look at how machine learning algorithms learn and how they can get things wrong — as happened when an algorithm tried to generate recipes and created Chocolate Chicken Chicken Cake.)

Some data is held out from the training data to be used as evaluation data, which tests how accurate the machine learning model is when it is shown new data. The result is a model that can be used in the future with different sets of data.
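The train-then-evaluate workflow described above can be sketched in a few lines. Everything in this example is an assumption made for illustration: the data is synthetic, the "model" is a straight line fitted by least squares, and the 80/20 split is an arbitrary choice rather than anything the article prescribes.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: y is roughly 3*x + 2 plus a little noise (values are invented).
x = rng.uniform(0.0, 10.0, size=100)
y = 3.0 * x + 2.0 + rng.normal(0.0, 0.5, size=100)

# Hold out the last 20 examples as evaluation data.
x_train, x_eval = x[:80], x[80:]
y_train, y_eval = y[:80], y[80:]

# "Training": fit slope and intercept by least squares on the training data only.
X_train = np.column_stack([x_train, np.ones_like(x_train)])
(slope, intercept), *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

# Evaluation: measure error on data the model has not seen.
pred_eval = slope * x_eval + intercept
eval_mse = np.mean((pred_eval - y_eval) ** 2)

print("slope:", slope, "intercept:", intercept, "evaluation MSE:", eval_mse)
```

The fitted slope and intercept should land near the values used to generate the data, and the evaluation error indicates how well the fit carries over to examples that were not used for training.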

Successful machine learning algorithms can do different things, Malone wrote in a recent research brief about AI and the future of work that was co-authored by MIT professor and CSAIL director Daniela Rus and Robert Laubacher, the associate director of the MIT Center for Collective Intelligence.

“The function of a machine learning system can be descriptive, meaning that the system uses the data to explain what happened; predictive, meaning the system uses the data to predict what will happen; or prescriptive, meaning the system will use the data to make suggestions about what action to take,” the researchers wrote.

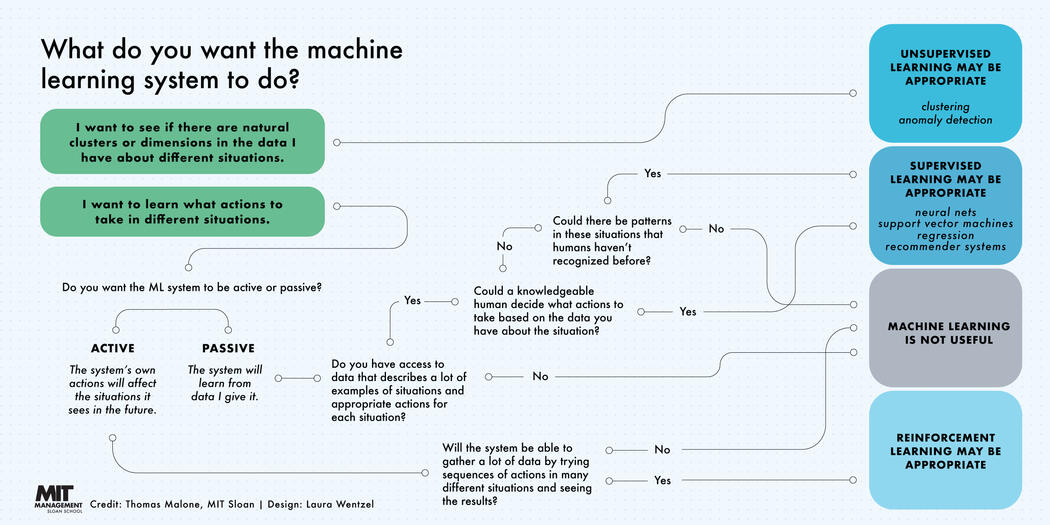

There are three subcategories of machine learning:

Supervised machine learning models are trained with labeled data sets, which allow the models to learn and grow more accurate over time. For example, an algorithm would be trained with pictures of dogs and other things, all labeled by humans, and the machine would learn ways to identify pictures of dogs on its own. Supervised machine learning is the most common type used today.

In unsupervised machine learning, a program looks for patterns in unlabeled data. Unsupervised machine learning can find patterns or trends that people aren’t explicitly looking for. For example, an unsupervised machine learning program could look through online sales data and identify different types of clients making purchases.

Reinforcement machine learning trains machines through trial and error to take the best action by establishing a reward system. Reinforcement learning can train models to play games or train autonomous vehicles to drive by telling the machine when it made the right decisions, which helps it learn over time what actions it should take.

In the Work of the Future brief, Malone noted that machine learning is best suited for situations with lots of data — thousands or millions of examples, like recordings from previous conversations with customers, sensor logs from machines, or ATM transactions. For example, Google Translate was possible because it “trained” on the vast amount of information on the web, in different languages.

In some cases, machine learning can gain insight or automate decision-making in cases where humans would not be able to, Madry said. “It may not only be more efficient and less costly to have an algorithm do this, but sometimes humans just literally are not able to do it,” he said.

Google search is an example of something that humans can do, but never at the scale and speed at which the Google models are able to show potential answers every time a person types in a query, Malone said. “That’s not an example of computers putting people out of work. It’s an example of computers doing things that would not have been remotely economically feasible if they had to be done by humans.”

Machine learning is also associated with several other artificial intelligence subfields:

Natural language processing

Natural language processing is a field of machine learning in which machines learn to understand natural language as spoken and written by humans, instead of the data and numbers normally used to program computers. This allows machines to recognize language, understand it, and respond to it, as well as create new text and translate between languages. Natural language processing enables familiar technology like chatbots and digital assistants like Siri or Alexa.

Neural networks

Neural networks are a commonly used, specific class of machine learning algorithms. Artificial neural networks are modeled on the human brain, in which thousands or millions of processing nodes are interconnected and organized into layers.

In an artificial neural network, cells, or nodes, are connected, with each cell processing inputs and producing an output that is sent to other neurons. Labeled data moves through the nodes, or cells, with each cell performing a different function. In a neural network trained to identify whether a picture contains a cat or not, the different nodes would assess the information and arrive at an output that indicates whether a picture features a cat.

Deep learning

Deep learning networks are neural networks with many layers. The layered network can process extensive amounts of data and determine the “weight” of each link in the network — for example, in an image recognition system, some layers of the neural network might detect individual features of a face, like eyes, nose, or mouth, while another layer would be able to tell whether those features appear in a way that indicates a face.

Like neural networks, deep learning is modeled on the way the human brain works and powers many machine learning uses, like autonomous vehicles, chatbots, and medical diagnostics.

“The more layers you have, the more potential you have for doing complex things well,” Malone said.

Deep learning requires a great deal of computing power, which raises concerns about its economic and environmental sustainability.

How businesses are using machine learning

Machine learning is the core of some companies’ business models, like in the case of Netflix’s suggestions algorithm or Google’s search engine. Other companies are engaging deeply with machine learning, though it’s not their main business proposition.

Others are still trying to determine how to use machine learning in a beneficial way. “In my opinion, one of the hardest problems in machine learning is figuring out what problems I can solve with machine learning,” Shulman said. “There’s still a gap in the understanding.”

In a 2018 paper, researchers from the MIT Initiative on the Digital Economy outlined a 21-question rubric to determine whether a task is suitable for machine learning. The researchers found that no occupation will be untouched by machine learning, but no occupation is likely to be completely taken over by it. The way to unleash machine learning success, the researchers found, was to reorganize jobs into discrete tasks, some of which can be done by machine learning, and others that require a human.

Companies are already using machine learning in several ways, including:

Recommendation algorithms. The recommendation engines behind Netflix and YouTube suggestions, what information appears on your Facebook feed, and product recommendations are fueled by machine learning. “[The algorithms] are trying to learn our preferences,” Madry said. “They want to learn, like on Twitter, what tweets we want them to show us, on Facebook, what ads to display, what posts or liked content to share with us.”

Image analysis and object detection. Machine learning can analyze images for different information, like learning to identify people and tell them apart — though facial recognition algorithms are controversial. Business uses for this vary. Shulman noted that hedge funds famously use machine learning to analyze the number of cars in parking lots, which helps them learn how companies are performing and make good bets.

Fraud detection. Machines can analyze patterns, like how someone normally spends or where they normally shop, to identify potentially fraudulent credit card transactions, log-in attempts, or spam emails.

Automatic helplines or chatbots. Many companies are deploying online chatbots, in which customers or clients don’t speak to humans, but instead interact with a machine. These algorithms use machine learning and natural language processing, with the bots learning from records of past conversations to come up with appropriate responses.

Self-driving cars. Much of the technology behind self-driving cars is based on machine learning, deep learning in particular.

Medical imaging and diagnostics. Machine learning programs can be trained to examine medical images or other information and look for certain markers of illness, like a tool that can predict cancer risk based on a mammogram.

How machine learning works: promises and challenges

While machine learning is fueling technology that can help workers or open new possibilities for businesses, there are several things business leaders should know about machine learning and its limits.

Explainability

One area of concern is what some experts call explainability, or the ability to be clear about what the machine learning models are doing and how they make decisions. “Understanding why a model does what it does is actually a very difficult question, and you always have to ask yourself that,” Madry said. “You should never treat this as a black box, that just comes as an oracle … yes, you should use it, but then try to get a feeling of what are the rules of thumb that it came up with? And then validate them.”